neural spectral methods

overview

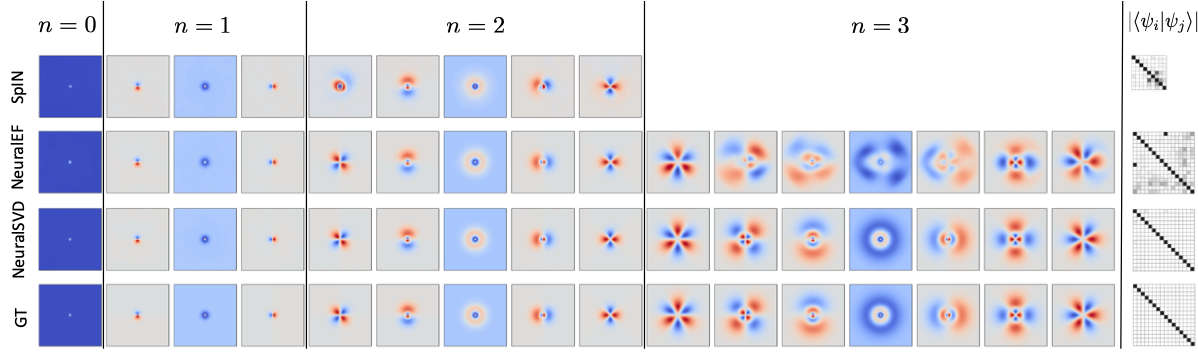

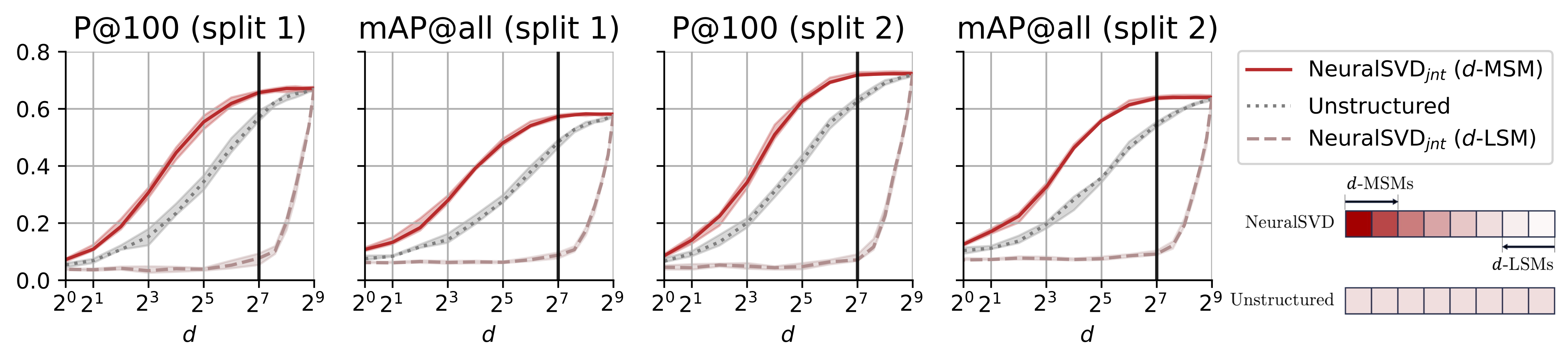

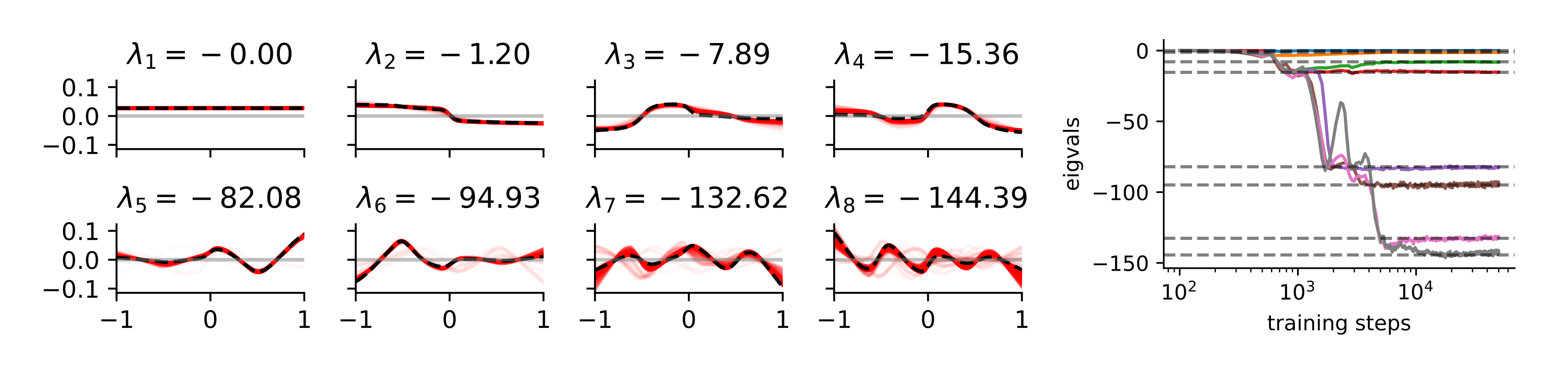

Neural spectral methods aim to learn the dominant spectral structure of linear operators that arise across scientific and machine learning applications, including solving PDEs, analyzing nonlinear dynamics, and learning compact representations. These methods parameterize eigenfunctions with neural networks and train them using variational principles, offering scalability and flexibility beyond classical Galerkin and finite-element approaches.

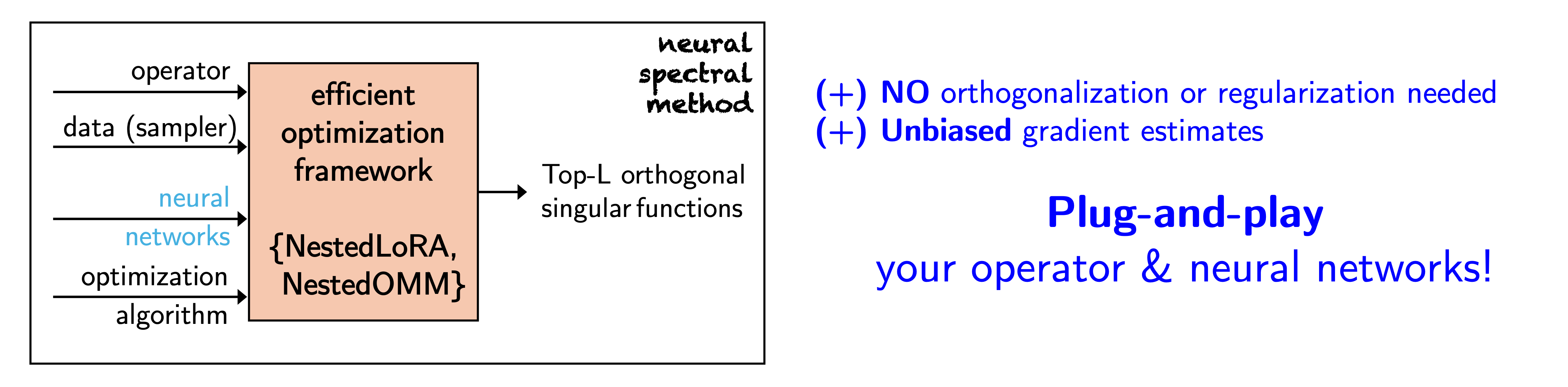

My work develops neural spectral methods through two complementary variational frameworks:

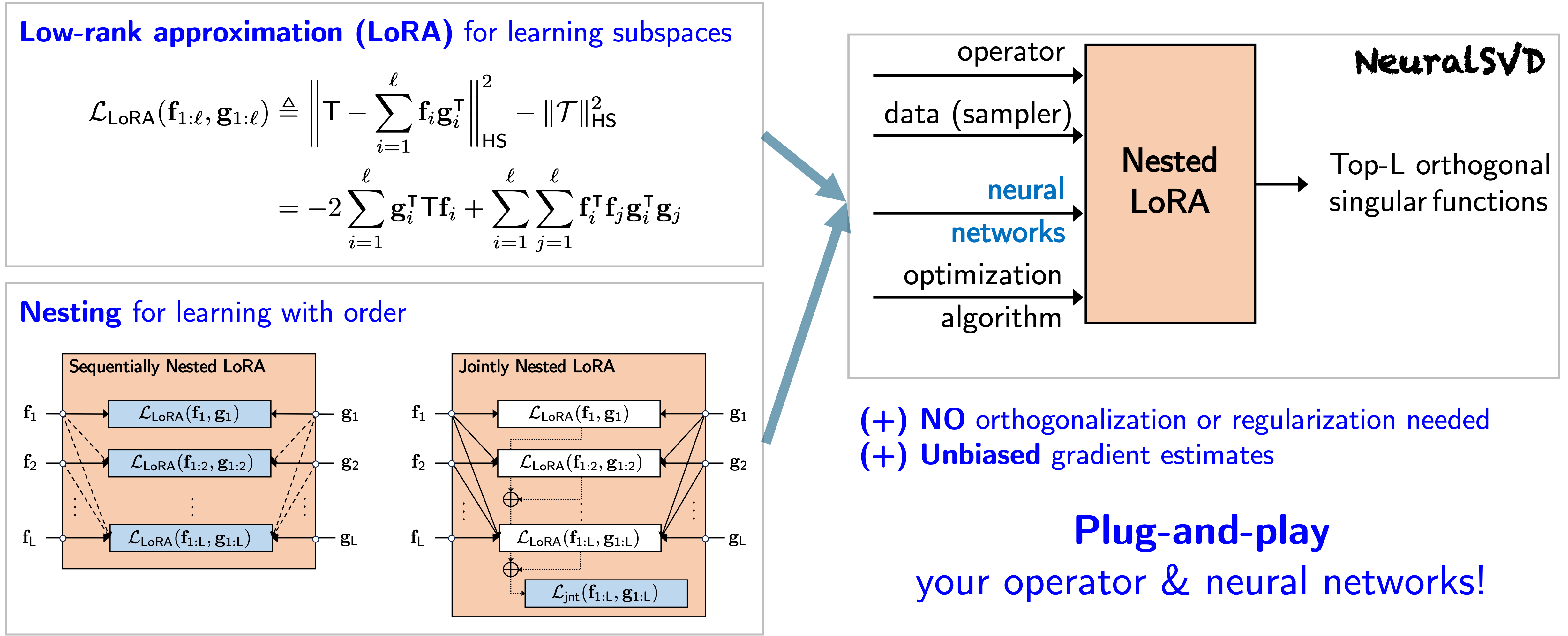

- Low-rank approximation (NestedLoRA / NeuralSVD) for compact operators [1].

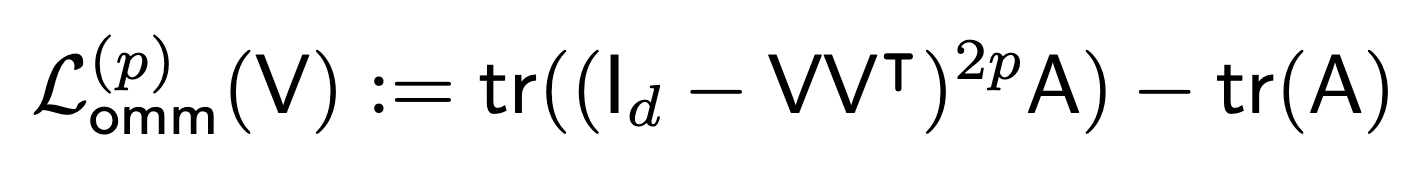

- Orbital minimization methods (NestedOMM) for positive-semidefinite operators [2].
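
To make the orbital-minimization idea concrete, here is a minimal sketch on a small symmetric positive-definite matrix instead of a neural network. The objective form `-2 tr(XᵀAX) + tr(XᵀX XᵀAX)`, the helper names `omm_loss_and_grad` and `fit_omm`, the toy matrix, and the step size are all assumptions made for illustration; [2] should be consulted for the formulation actually used in NestedOMM. No nesting is applied here, so the sketch only recovers an orthonormal basis of the top eigenspace, not the individual ordered eigenvectors.

```python
import numpy as np

def omm_loss_and_grad(A, X):
    """Assumed OMM-style objective -2 tr(X^T A X) + tr(X^T X X^T A X) and its gradient.

    A is assumed symmetric positive definite; X holds k candidate vectors as columns.
    """
    AX = A @ X
    S = X.T @ X   # overlap (Gram) matrix of the candidate vectors
    H = X.T @ AX  # operator projected onto the candidate vectors
    loss = -2.0 * np.trace(H) + np.trace(S @ H)
    grad = -4.0 * AX + 2.0 * X @ H + 2.0 * AX @ S
    return loss, grad

def fit_omm(A, k, steps=3000, lr=0.02, seed=0):
    """Plain gradient descent from a small random start; no orthogonalization step."""
    rng = np.random.default_rng(seed)
    X = 0.1 * rng.normal(size=(A.shape[0], k))
    for _ in range(steps):
        _, grad = omm_loss_and_grad(A, X)
        X -= lr * grad
    return X

# Toy matrix with known eigenvalues 3, 2, 1, 0.5 (hypothetical test case).
rng = np.random.default_rng(1)
Q, _ = np.linalg.qr(rng.normal(size=(4, 4)))
A = Q @ np.diag([3.0, 2.0, 1.0, 0.5]) @ Q.T
A = 0.5 * (A + A.T)  # remove floating-point asymmetry

X = fit_omm(A, k=2)
print(np.round(X.T @ X, 4))   # should be close to the 2x2 identity
print(np.trace(X.T @ A @ X))  # should be close to 3 + 2, the two largest eigenvalues
```

The point of the sketch is that the columns of `X` become approximately orthonormal even though the loop never normalizes or orthogonalizes them: the quartic term in the objective penalizes overlap on its own.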

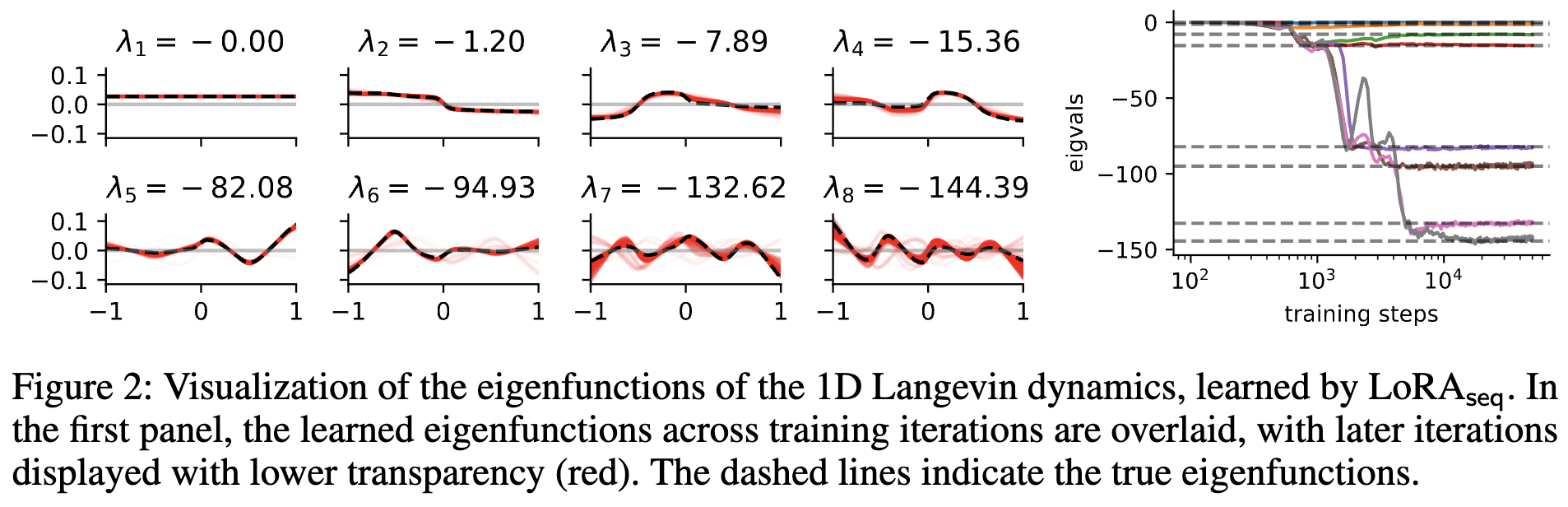

Both frameworks use a simple nesting strategy to learn the eigenfunctions in order during training, which removes the need for explicit orthogonality constraints and enables stable optimization. These methods provide improved spectral accuracy, stronger sample efficiency, and robustness across operator learning tasks.
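
The nesting idea can be illustrated on a plain matrix. The sketch below is an assumption-laden toy, not the implementation from [1]: it sums the low-rank approximation error of every prefix of the factor columns (rank 1, rank 2, and so on), so that the first column must be the best rank-1 fit, the first two the best rank-2 fit, and so forth. The names `nested_lora_loss_and_grads` and `fit_nested_lora`, the equal weighting of the prefixes, the toy matrix, and the step size are hypothetical choices.

```python
import numpy as np

def nested_lora_loss_and_grads(A, U, V):
    """Sum over l = 1..L of ||A - U[:, :l] V[:, :l]^T||_F^2, with its gradients."""
    loss = 0.0
    grad_U, grad_V = np.zeros_like(U), np.zeros_like(V)
    for l in range(1, U.shape[1] + 1):
        R = A - U[:, :l] @ V[:, :l].T  # residual of the rank-l prefix
        loss += np.sum(R ** 2)
        grad_U[:, :l] -= 2.0 * R @ V[:, :l]
        grad_V[:, :l] -= 2.0 * R.T @ U[:, :l]
    return loss, grad_U, grad_V

def fit_nested_lora(A, rank, steps=5000, lr=0.02, seed=0):
    """Plain gradient descent on both factors; no orthogonality constraint is imposed."""
    rng = np.random.default_rng(seed)
    U = 0.1 * rng.normal(size=(A.shape[0], rank))
    V = 0.1 * rng.normal(size=(A.shape[1], rank))
    for _ in range(steps):
        _, grad_U, grad_V = nested_lora_loss_and_grads(A, U, V)
        U -= lr * grad_U
        V -= lr * grad_V
    return U, V

# Toy 5x4 matrix with known singular values 3, 2, 1 (hypothetical test case).
rng = np.random.default_rng(1)
Q1, _ = np.linalg.qr(rng.normal(size=(5, 5)))
Q2, _ = np.linalg.qr(rng.normal(size=(4, 4)))
A = Q1[:, :3] @ np.diag([3.0, 2.0, 1.0]) @ Q2[:, :3].T

U, V = fit_nested_lora(A, rank=2)
# Each pair of columns (U[:, l], V[:, l]) estimates one singular triplet;
# the product of the column norms estimates the singular value.
sigma_hat = np.linalg.norm(U, axis=0) * np.linalg.norm(V, axis=0)
print(sigma_hat)  # should be close to [3, 2], in that order
```

Without the nested sum, minimizing only the rank-2 error would pin down the product `U @ V.T` but not the individual columns, so the ordering of the recovered components would be arbitrary.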

applications

These tools apply to solving PDEs and to representation learning for reinforcement learning and graph data, as demonstrated in [1] and [2].

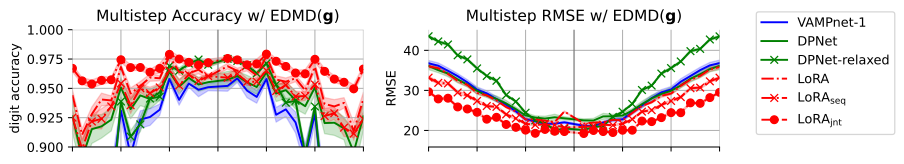

Another important application is Koopman operator learning for nonlinear dynamical systems. In [3], we demonstrate that NestedLoRA (a.k.a. NeuralSVD) learns compact Koopman approximations more accurately and efficiently than VAMPnet and DPNet, enabling better long-horizon prediction and interpretable dynamical modes.
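
For readers new to Koopman learning, the following sketch shows what a finite-dimensional Koopman approximation looks like in the simplest possible setting. It is a classical least-squares fit with an identity feature map on a noise-free linear system, not the method of [3] and not VAMPnet or DPNet; the system matrix `M`, the sample size, and the helper `predict` are hypothetical choices made for illustration.

```python
import numpy as np

# Hypothetical toy dynamics: x_{t+1} = M x_t (linear, noise-free).
M = np.array([[0.9, 0.2],
              [0.0, 0.5]])

rng = np.random.default_rng(0)
X = rng.normal(size=(200, 2))  # sampled states x_t, one per row
Y = X @ M.T                    # corresponding successor states x_{t+1}

# With identity features, the Koopman matrix K is the least-squares solution of Y ≈ X K.
K, *_ = np.linalg.lstsq(X, Y, rcond=None)

# Eigenvalues of K are the estimated Koopman eigenvalues (decay rates of the modes).
eigvals = np.sort(np.linalg.eigvals(K).real)
print(eigvals)  # should be close to the eigenvalues of M, namely 0.5 and 0.9

def predict(x0, horizon):
    """Roll the fitted model forward: x_t^T = x_0^T K^t."""
    return x0 @ np.linalg.matrix_power(K, horizon)

x0 = np.array([1.0, -1.0])
print(predict(x0, 10))  # should match M^10 applied to x0
```

In realistic nonlinear or stochastic systems the identity feature map is not enough, which is where learned features and a compact spectral decomposition of the operator come in.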

broader perspective

Neural spectral methods sit at the interface of operator theory, numerical linear algebra, information theory, and deep learning.

They provide a structured approach to learning operators in scientific machine learning. Open directions include:

- extensions to higher-dimensional problems;

- convergence analysis of neural spectral methods;

- integration with uncertainty quantification;

- and spectral methods for generative and inverse modeling.

references

2025

- Efficient Parametric SVD of Koopman Operator for Stochastic Dynamical Systems. In NeurIPS, December 2025

2024

- Operator SVD with Neural Networks via Nested Low-Rank Approximation. In ICML, July 2024